- Semiconductor Technology Now

Report Series

Two approaches to image recognition

In an automated driving system, the data collection process provides information on the vehicle's surroundings, which is then analyzed for recognition so that appropriate behavioral decisions can be made. Let us now focus on the technologies that bear on analysis/recognition and behavioral decision-making.

In the area of analysis and recognition, the most actively studied technology identifies persons and objects (such as vehicles and bicycles) in 2D image data and estimates their positions and movements in 3D space. Two types of image recognition algorithms are used for automated driving: structure estimation, which uses stereo image parallax, and pattern recognition, which identifies objects based on the characteristics of shapes and motions captured by a monocular camera.

Structure estimation uses the disparity (parallax) between the two images taken by a stereo camera to determine the 3D structure of an object. Since this technique can distinguish the road surface from obstacles fairly easily, it was adopted early on in pre-collision braking systems, such as Subaru's EyeSight.
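
As a rough illustration of how parallax translates into distance, the sketch below applies the standard pinhole-stereo relation (depth = focal length × baseline / disparity) for a rectified camera pair. The function name and the camera values (1000-pixel focal length, 0.35 m baseline) are assumptions chosen for the example and do not describe EyeSight or any other product.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Distance in meters to a point seen by a rectified stereo pair.

    disparity_px    : horizontal shift of the point between left and right images
    focal_length_px : focal length expressed in pixels (assumed known from calibration)
    baseline_m      : distance between the two camera centers
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera parameters, for illustration only.
    for disparity in (70.0, 35.0, 7.0):
        distance = depth_from_disparity(disparity, 1000.0, 0.35)
        print(f"disparity {disparity:5.1f} px -> about {distance:.1f} m")
```

Nearby objects produce a large disparity and distant ones a small disparity, which is why a one-pixel matching error matters far more at long range than at short range.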

Pattern recognition technology improved rapidly in the 2000s thanks to progress in machine learning, making it possible to detect pedestrians and vehicles with greater accuracy than ever before. The technology has been used for active cruise control (ACC) and collision prevention systems. A representative application of this technology is Mobileye's EyeQ, an image processor chip adopted by BMW, General Motors, Volvo and other carmakers.

Cloud and AI improve accuracy of recognition

Identifying an object on the road and deciding on the optimum move for the car requires very sophisticated processing. Imagine that a branch of a roadside tree has broken off and fallen onto the road. If the branch is small enough, the car can move straight ahead and crunch over it. But if the branch is very thick and large, it has to be avoided. To make the right decision, the system must be able to measure the size of the object as well as recognize what it actually is.

To make this kind of sophisticated judgment, the use of artificial intelligence (AI) based on deep learning is being considered. NVIDIA's Drive PX is a case in point.

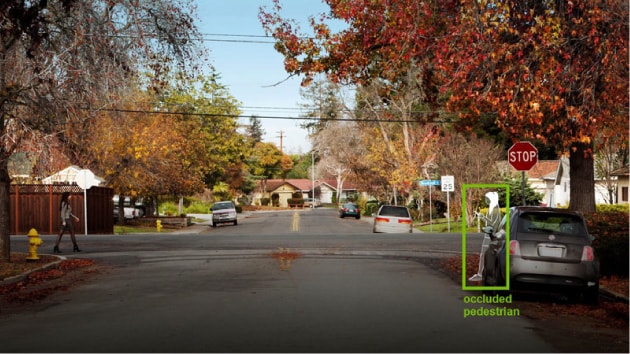

Drive PX uses an AI-based deep neural network to accurately detect and classify objects captured by onboard cameras, allowing the vehicle to assess its surroundings. A prototype vehicle developed by NVIDIA has a graphics processor capable of parallel-processing image data captured by 12 HD cameras. The system is capable of recognizing a cyclist partially hidden behind other cars and obstacles (Figure 6).

The system is distinctive in that the onboard chips and a datacenter work in tandem. Supercomputers at the company's datacenter perform deep learning to optimize the software, which is then transferred to the onboard chips. When individual vehicles on the road encounter new situations requiring impromptu responses, the information is uploaded to the cloud. Once the cloud learns the optimum response, the updated parameters are downloaded to the chips on all vehicles concerned. Audi has already announced its intent to adopt this system.

Instantly modeling the vehicle's surroundings in 3D

So far, we have covered the analysis and recognition process that determines the position, direction of movement, and speed of the vehicle and other objects in its vicinity. The next process involves converting the detected objects into models and placing them on an up-to-the-second 3D map of the surroundings, known as the dynamic navigation map, so that the vehicle can take appropriate action. The mapping process is similar to the real-time generation of 3D graphics in video games.

In fact, this is technologically the most challenging process in an automated driving system. The electronically modeled virtual environment always contains some margin of error compared with its real-world counterpart. The sensors' measurement data may be quite accurate but are never perfect, and the same is true of the control of the car's mechanisms. If we blindly trust the sensors' data, unexpected accidents could occur. So it is important to pursue reliable vehicle control while being fully aware that some margin of error is inevitable.

Controlling vehicles without blindly trusting sensor data

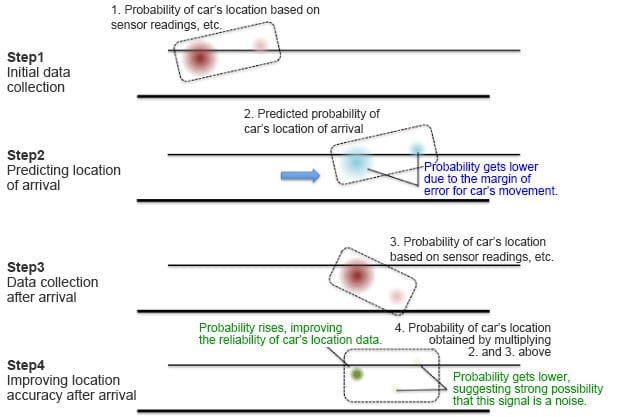

The Google Car has attained a high level of control that takes the sensors' margins of error into account, based on an approach known as probabilistic robotics (Figure 7). The method represents the positions and movements of the car and surrounding objects in a deliberately ambiguous manner on the dynamic navigation map, so that more reliable location information can be obtained using advanced algorithms. Specifically, the procedure is as follows:

The first step is to describe the car's location determined by sensor readings in terms of probabilities, taking the margin of error into account (Step 1 in Figure 7). If more than one sensor is involved, multiple sensor readings with different margins of error are used to determine the location, assuming that the car is located where the probability is the highest. In the next step, behavioral decisions are made based on the assumed location, and the car is virtually moved to the next position (Step 2 in Figure 7). However, any real-world movement of the car is subject to margins of error. Therefore, the virtual location of the car after arrival is also expressed in terms of probabilities. Since the margins of error in sensor readings and control are combined, the ambiguity of the car's location increases.

The car is then actually moved to the next position, and its location is measured again using the sensors (Step 3 in Figure 7). Finally, the probability of the virtual location of the car calculated in Step 2 and the probability of the car's location based on the new sensor readings are multiplied together (Step 4 in Figure 7). As a result, the probability is enhanced where the difference between the virtual and real positions is small, and it diminishes where the difference is larger. The four steps are repeated as the car keeps moving, and the iteration of this cycle improves the accuracy of prediction.
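
The four steps can be sketched in one dimension if each probability distribution is assumed to be a Gaussian, which turns the cycle into a simple Kalman-filter-style update. This is only an illustration under that assumption: the article does not say which distributions or algorithms the Google Car uses, and the `Belief` type, the function names, and all sensor values below are hypothetical.

```python
from typing import NamedTuple


class Belief(NamedTuple):
    mean: float  # most probable position along the road (m)
    var: float   # variance, i.e. the squared margin of error (m^2)


def fuse(a: Belief, b: Belief) -> Belief:
    """Multiply two Gaussian beliefs and renormalize (used in Steps 1 and 4)."""
    var = 1.0 / (1.0 / a.var + 1.0 / b.var)
    mean = var * (a.mean / a.var + b.mean / b.var)
    return Belief(mean, var)


def predict(belief: Belief, move: float, move_var: float) -> Belief:
    """Virtually move the car; sensor and control uncertainties add up (Step 2)."""
    return Belief(belief.mean + move, belief.var + move_var)


if __name__ == "__main__":
    # Step 1: two sensors with different margins of error (made-up values).
    coarse_sensor = Belief(mean=10.0, var=4.0)
    fine_sensor = Belief(mean=10.6, var=1.0)
    belief = fuse(coarse_sensor, fine_sensor)

    # Step 2: virtual move of 5 m with imperfect control; ambiguity grows.
    predicted = predict(belief, move=5.0, move_var=0.5)

    # Step 3: the car actually moves and its position is measured again.
    measured = Belief(mean=15.2, var=1.0)

    # Step 4: multiply prediction and measurement; ambiguity shrinks.
    updated = fuse(predicted, measured)

    for name, b in [("after Step 1", belief), ("after Step 2", predicted),
                    ("after Step 4", updated)]:
        print(f"{name}: mean = {b.mean:.2f} m, variance = {b.var:.2f} m^2")
```

In this sketch the variance rises after the virtual move and falls again after the multiplication, mirroring the description above: the estimate is pulled toward whichever source, prediction or measurement, has the smaller margin of error.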

An accurate map makes behavioral decision-making fairly easy

Once an accurate dynamic navigation map has been drawn, the only remaining task is to make behavioral decisions. This process involves determining the car's lines of movement and speeds using a virtual 3D map that mirrors the car's surroundings. It also involves calculating the best routes and lanes to take and the optimum maneuvers for reaching the destination. If special actions such as parking and making U-turns are involved, those movements must also be planned ahead.

One would think that behavioral decision-making requires highly complex technologies, but once an accurate dynamic navigation map is at hand, calculating the line of movement and speeds can be surprisingly easy. In fact, the processing is similar to that used in car racing game software. If you've played a racing game, you know how other cars zoom past you skillfully without crashing while you clumsily crawl ahead. It's the smooth and intelligent movement of those computer-generated cars that real self-driving cars would mimic.

The difference between racing games and real automated driving is that the latter requires separate sets of information for different control mechanisms such as the accelerator pedal, steering wheel, brakes, and gearbox. Therefore, it is critical to make coordinated behavioral decisions to ensure that these mechanisms work together in a smooth and efficient manner.
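
To make the idea of coordinated commands concrete, the sketch below turns one behavioral decision (a target speed and a heading correction) into separate accelerator, brake, and steering commands, ensuring that the accelerator and brake are never applied at the same time. It is a deliberately simplified proportional controller: the `Commands` type, the `decide` function, and the gain values are assumptions made for illustration, and gear selection is left out.

```python
from dataclasses import dataclass


@dataclass
class Commands:
    throttle: float  # accelerator position, 0.0 to 1.0
    brake: float     # brake pressure, 0.0 to 1.0
    steering: float  # steering input, -1.0 (full left) to 1.0 (full right)


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def decide(speed: float, target_speed: float, heading_error: float,
           k_speed: float = 0.1, k_steer: float = 1.0) -> Commands:
    """Map one decision onto coordinated actuator commands.

    speed, target_speed : current and desired speed (m/s)
    heading_error       : desired minus current heading (rad)
    k_speed, k_steer    : hypothetical proportional gains
    """
    effort = k_speed * (target_speed - speed)
    # A positive effort goes to the accelerator, a negative one to the brake,
    # so the two are never commanded simultaneously.
    throttle = clamp(effort, 0.0, 1.0)
    brake = clamp(-effort, 0.0, 1.0)
    steering = clamp(k_steer * heading_error, -1.0, 1.0)
    return Commands(throttle, brake, steering)


if __name__ == "__main__":
    print(decide(speed=20.0, target_speed=25.0, heading_error=0.0))
    print(decide(speed=25.0, target_speed=20.0, heading_error=0.1))
```

A production system would add smoothing, actuator limits, and gear logic, but even this small example shows why the commands have to be derived from a single decision rather than computed independently.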

The technology for automated driving is moving ahead at a furious pace. There is no doubt that many innovative approaches will be introduced in the future, eventually delivering systems that can drive cars faster and more proficiently than any human can.

Writer

Motoaki Ito

Motoaki Ito is the CEO of Enlight, Inc., which he founded in 2014. The company leverages its expertise in communicating the value of specific technologies to target audiences, and offers marketing support mainly to technology companies. Prior to setting up Enlight, Ito worked for Nikkei Business Publications (Nikkei BP) for four years as an advertising producer in its technology information group, with a main focus on marketing support. Before that, he spent six years as a consultant at TechnoAssociates, Inc., a joint think tank between Nikkei BP and Mitsubishi Corporation, supporting clients' manufacturing operations.

Prior to that, he worked for 12 years as a journalist, editor, and editor-in-chief for various publications including Nikkei Microdevices, Nikkei Electronics, and Nikkei BP Semiconductor Research, after a three-year stint at Fujitsu developing semiconductors as an engineer.