
- Report Series

A Guide to Fast Optimal Solutions to Complex Problems for Quantum Computers

- Text by Yoshitaka Arafune

- 2021.12.14

Most people have already heard the term “quantum computer.” There has been a lot of interest in quantum computers over the last few years, with great expectations that they will dramatically change the world soon. These days, we use computers all the time in our daily lives. Personal computers and smartphones are obvious computers, but there are many more computers hidden in plain sight around us in cars, televisions, microwave ovens, air conditioners, and even in rice cookers. Computers enrich our lives and make them more convenient. Quantum computers operate on completely different principles to the computers we are using now. This report explains what quantum computers are, and why they are attracting so much interest.

“Human Computers”: The Origin of the Word “Computer”

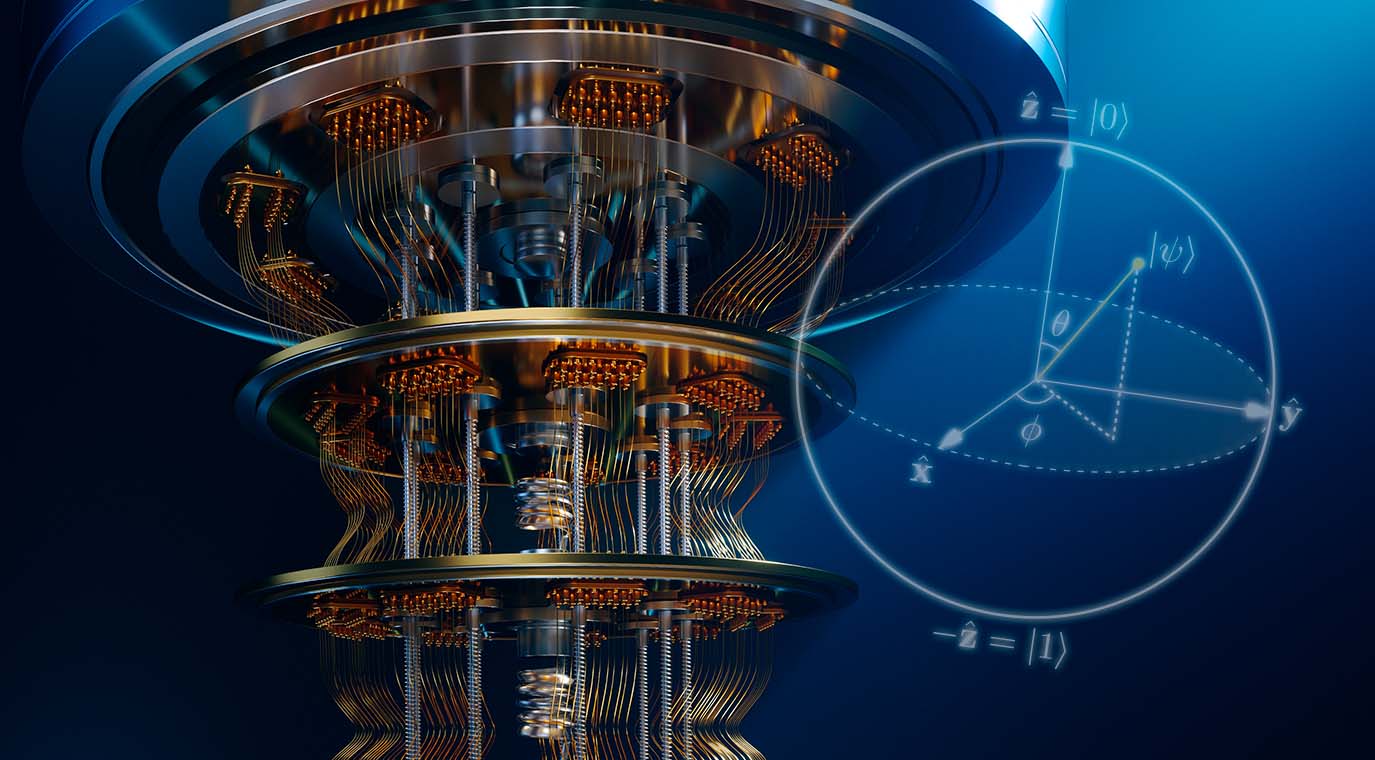

The IBM Quantum System One created headlines when it became operational in Kawasaki City, Kanagawa Prefecture in July 2021 (Figure 1). This was IBM’s third quantum computer installation after the U.S. and Germany. In addition to the University of Tokyo and Keio University, companies like Toshiba, Toyota, and Hitachi will use the quantum computer to search for potential business applications.

- Figure 1. IBM Quantum System One quantum computer

- Credit: “IBM Quantum System One (Japan)” by IBM Research is licensed under the Creative Commons Attribution-NoDerivs 2.0 Generic (CC BY-ND 2.0).

Quantum computers are expected to solve problems that even supercomputers cannot solve, which is why they are attracting so much interest. Before discussing quantum computers, it is important to first understand the computers currently being used. When people speak about today’s computers, they are referring to electronic computers that run on electricity; however, the word originally referred to humans who computed mathematical problems. Complex calculations used to be performed by hand, and the people who performed these computations were called computers (Figure 2).

Tools such as manual calculators, abacuses, and slide rules could also be called computers because they too calculate and handle information. However, each of these tools needs a proficient human operator to compute adequately. In this sense as well, the human computer was a central part of the process.

- Figure 2. Human computers

- Human computers worked on tasks related to research, development, and operation of unmanned space probes at the NASA Jet Propulsion Laboratory.

Credits: NASA/JPL-Caltech

From the second half of the 20th century, this picture changed. With the development of electronic computers, it became possible to perform calculations without human intervention. From around this time, the word “computer” began to be used for machines that compute rather than humans who compute.

Computer Calculations Using On/Off Switching

These days, the word “computer” refers to electronic computers that use electronic circuits composed of many electrical switches. Electricity flows when a switch is on and does not flow when the switch is off. Calculations are performed using this difference in state, that is, whether each switch is on and passing current or off and blocking it. In computers, the off state of a switch is represented by the number “0” and the on state by the number “1.” Humans normally use whole or decimal numbers in day-to-day calculations, but computers can only work with the digits 0 and 1. As a result, they calculate using binary numbers, in which every value is written as a sequence of 0s and 1s.
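As a minimal sketch of this idea, the Python snippet below writes an everyday number as a row of on/off switches and reads it back. The function names and the choice of eight switches are assumptions made for illustration only.

```python
def to_switches(value: int, width: int = 8) -> list[int]:
    """Return the on (1) / off (0) states for a number, leftmost switch first."""
    if value < 0 or value >= 2 ** width:
        raise ValueError("value does not fit in the given number of switches")
    # Check each binary position from the most significant to the least.
    return [(value >> position) & 1 for position in range(width - 1, -1, -1)]


def from_switches(switches: list[int]) -> int:
    """Rebuild the everyday number from a row of on/off states."""
    total = 0
    for state in switches:
        total = total * 2 + state  # shift left by one place, then add the new digit
    return total


print(to_switches(13))                 # [0, 0, 0, 0, 1, 1, 0, 1]
print(from_switches(to_switches(13)))  # 13
```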

The modern computer uses transistors, which are electronic components that switch on and off. Connecting these transistors together creates a circuit in which turning a single transistor switch on or off can turn connected transistor switches on or off. In this way, the state of transistors across an entire circuit can be continuously changed. This enables the computer to perform extremely complex calculations automatically.
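The way connected switches add up to a calculation can be sketched in a few lines of Python. The example below is an illustration under stated assumptions, not a description of any particular chip: it models one simple switch circuit (a NAND gate), builds the other logic gates from it, and chains them to add two binary numbers. All names are hypothetical.

```python
def nand(a: int, b: int) -> int:
    """Output is off only when both inputs are on."""
    return 0 if (a == 1 and b == 1) else 1


def and_(a: int, b: int) -> int:
    return nand(nand(a, b), nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(nand(a, a), nand(b, b))


def xor(a: int, b: int) -> int:
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits and return (sum bit, carry bit)."""
    partial = xor(a, b)
    total = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return total, carry_out


def add_bits(x_bits: list[int], y_bits: list[int]) -> list[int]:
    """Add two equal-length binary numbers given least significant bit first."""
    carry = 0
    result = []
    for a, b in zip(x_bits, y_bits):
        bit, carry = full_adder(a, b, carry)
        result.append(bit)
    result.append(carry)
    return result


# 3 + 5 = 8, with bits listed from the least significant end
print(add_bits([1, 1, 0], [1, 0, 1]))  # [0, 0, 0, 1]
```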

Early transistors were made of germanium, but they were later replaced with transistors made from low-cost and readily available silicon. In the 1960s, the development of integrated circuits (ICs) – single chips comprising many small transistors – meant that computers could suddenly be reduced in size.

In the 1970s, large-scale integrated circuits (LSIs) enabled many more transistors to be packed onto the same size chip. Computer performance increased further, and prices dropped, which enabled their use in homes rather than being limited to only companies or research laboratories. Computer performance has increased annually ever since the first transistors were used.

Miniaturization of Transistors Leads to Dramatic Progress of Computers

The progress of computers was enabled by the miniaturization of transistors and the associated integration of electronic circuits. Modern computers represent, calculate, and process information using combinations of on and off states of switches. Computer performance can be improved by increasing the number of transistors fabricated on a single chip.

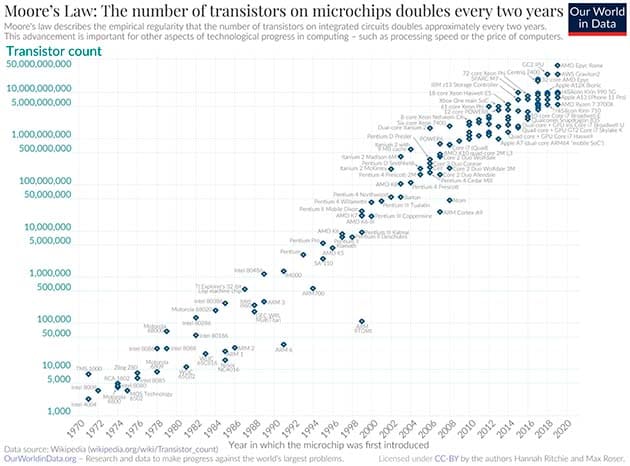

In 1965, Gordon Moore, who later co-founded Intel Corporation, observed that the number of transistors that can be packed onto a semiconductor chip of the same area doubles at a regular interval. He first put the interval at about one year and revised it in 1975 to about two years; the figure of 18 months is also widely quoted. This observation, which became known as “Moore’s Law,” has described the integration and performance of semiconductor chips well for more than 50 years (Figure 3).

- Figure 3. Moore’s Law and actual performance of semiconductors

- Moore’s Law was an observation made in 1965, but for more than 50 years since then, the integration of transistors has mostly followed this law.

Credit: “A logarithmic graph showing the timeline of how transistor counts in microchips are almost doubling every two years from 1970 to 2020; Moore's Law.” by Max Roser and Hannah Ritchie is licensed under the Creative Commons Attribution 4.0 International license.
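To get a feel for how quickly repeated doubling adds up, the short Python sketch below projects a transistor count forward in time. The starting point (about 2,300 transistors in 1971) and the function name are assumptions chosen for illustration, and the doubling interval is left as a parameter so that different quoted intervals can be compared.

```python
def projected_transistors(start_count: float, start_year: float,
                          year: float, doubling_years: float) -> float:
    """Project a count that doubles once every `doubling_years` years."""
    doublings = (year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Illustrative starting point (an assumption): about 2,300 transistors in 1971.
for interval in (1.5, 2.0):
    estimate = projected_transistors(2300, 1971, 2021, interval)
    print(f"doubling every {interval} years -> about {estimate:.2e} transistors in 2021")
```

Even a small change in the doubling interval leads to very different totals after 50 years, which is why the interval matters when Moore’s Law is quoted.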

The increasing integration of transistors has enabled computers to also improve in performance dramatically. This led to the development of system LSIs that incorporate circuits with multiple functions onto a single chip, including central processing unit (CPU) functions that calculate and control memory functions and input-output device functions. As a result, a long series of compact, high-performance computers began to appear.

The smartphone provides the best example of computer miniaturization. Rather than just being a telephone, a single smartphone is equipped with a range of readily available and easy-to-use functions, including maps, cameras, and music players. Silicon microfabrication technologies have enabled this integration of transistors. The transistors fabricated on silicon chips keep getting smaller. It is now possible to fabricate transistors of around 10 nanometers (a nanometer is one billionth of a meter), roughly one ten-thousandth of the width of a strand of human hair.

The Limits of Transistor Miniaturization

Some of the computers currently on the market use chips with 7- or 5-nanometer transistors. In fact, IBM announced in May 2021 that it had developed semiconductors with transistors as small as 2 nanometers. It appears that the integration of transistors, and the subsequent improvement in computer performance, will continue to follow Moore’s Law.

However, many people suggest that Moore’s Law may soon reach its limit, with one of the reasons being the limit to which transistors can be miniaturized. The semiconductor chips used in computers are made with silicon microfabrication. Until now, the norm was to fabricate smaller and smaller transistors on silicon chips, but no matter how small the transistors became, they could not be smaller than an atom. A single atom measures approximately 0.1 nanometers. Because transistors are already as small as 2 nanometers, there are fears that trying to reduce their size further would mean they could no longer act as switches and Moore’s Law would collapse. When the integration of transistors does peak, it is unlikely that computer development would continue at its current pace.

With current computers, each instruction in a program is executed as a series of separate operations. While each instruction might be a simple operation, it is the combination of many of these instructions that enables a complex task to be performed. Because computer processing speed has increased through transistor integration, computers have been able to quickly process data even while dealing with vast amounts of data that are beyond human capabilities.

The Traveling Salesman Problem That Even Bothers Computers

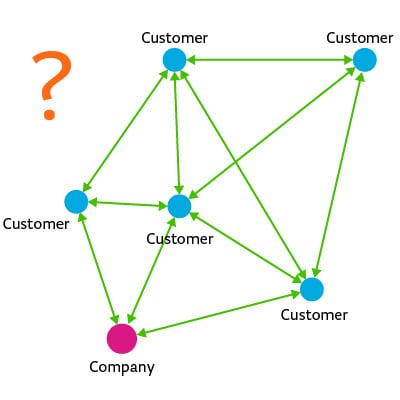

It is also possible to dramatically improve calculation speeds by looking at how programs are designed to run on computers and then making small changes to their methodology. However, even with ideas like this, there are problems that are still difficult for the current generation of computers to solve. A typical example of this is the traveling salesman problem (Figure 4).

The traveling salesman problem consists of a single salesman who must travel to multiple cities and wants to find the most efficient route to take. For example, if there are three cities to visit, there are three possible routes that can be compared to find the best route. However, the number of possible routes increases to 12 for four cities and 60 for five cities, and it keeps growing explosively (factorially, which is even faster than exponentially) as the number of cities to visit increases.
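The route counts quoted above (3, 12, and 60) match the formula n!/2 if we assume that the salesman starts and ends at a home base and that a route and its reverse count as the same route. Under that assumption, the Python sketch below counts the routes and finds the shortest one by checking every possibility. The coordinates and function names are made up for illustration.

```python
from itertools import permutations
from math import dist, factorial


def count_round_trips(cities_to_visit: int) -> int:
    """Round trips from a home base, counting a route and its reverse once."""
    return max(1, factorial(cities_to_visit) // 2)


def shortest_round_trip(home, cities):
    """Brute force: try every visiting order and keep the shortest round trip."""
    best_order, best_length = None, float("inf")
    for order in permutations(cities):
        stops = [home, *order, home]
        length = sum(dist(a, b) for a, b in zip(stops, stops[1:]))
        if length < best_length:
            best_order, best_length = order, length
    return best_order, best_length


print([count_round_trips(n) for n in (3, 4, 5, 10)])  # [3, 12, 60, 1814400]

# Hypothetical map: home at the origin and three cities on a unit square.
order, length = shortest_round_trip((0, 0), [(0, 1), (1, 1), (1, 0)])
print(order, length)
```

With only 10 cities there are already more than 1.8 million round trips to compare, which shows why checking every route quickly becomes impractical.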

- Figure 4. Illustration of the traveling salesman problem

- The traveling salesman problem consists of a single salesman who must travel around multiple cities to visit customers and wants to find the most efficient route to take. Although no method has so far been found that solves this problem efficiently as the number of cities grows, research is underway to find a method for calculating good solutions as quickly as possible. This is because of the potential application of such a solution to a range of problems in society, including delivery routes for parcel delivery services and operating schedules for machine tools.

Present-day computers have difficulty solving problems that require thinking about optimal combinations. Problems like these require each possible combination to be examined separately, so the computer may not be able to find the optimal solution no matter how fast its processing speed, or it may still take too long to find the solution, rendering it impractical.

Quantum Computers Calculate in a State of Superposition

Quantum computers are expected to be able to solve these problems. Expectations are that quantum computers will also calculate faster than current computers in a range of other fields, including factorization into prime factors, decryption, chemical reaction calculations, and artificial intelligence. So how do quantum computers actually perform calculations?

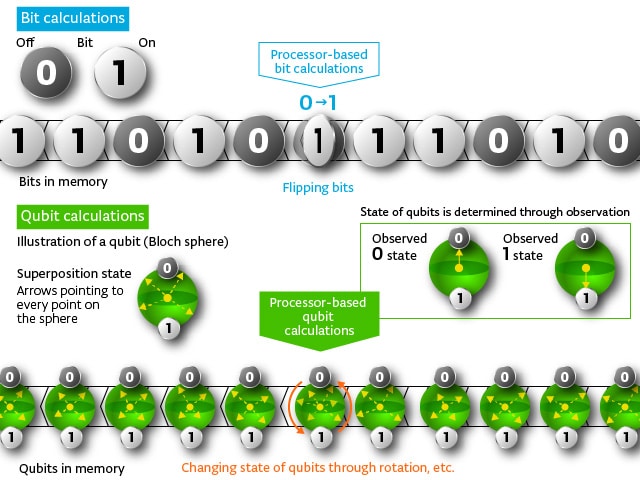

Current computers calculate by treating the presence or absence of electric signals as 0s and 1s. These 0s and 1s, which are the smallest unit of data in calculations, are called bits. That data can be rewritten at high speed by turning the switches on or off to perform a range of calculations. The result is then converted to text, images, or another format that can be displayed on a screen. The smallest unit of data for quantum computers is also the bit, with calculations performed by manipulating those bits.

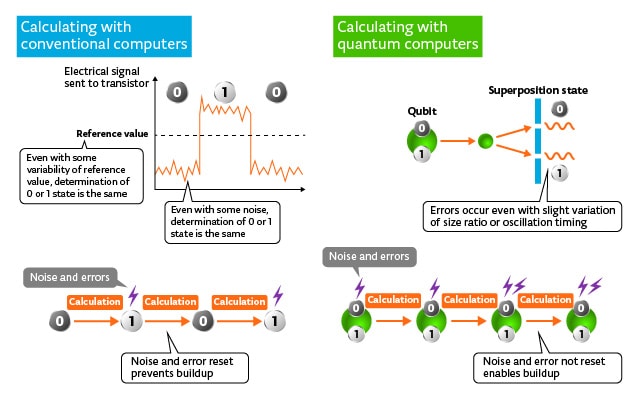

Looking at this explanation alone, there may not appear to be any difference from normal computers. However, the bits that quantum computers manipulate are called quantum bits, or qubits, and they differ from the bits of normal computers. A qubit can represent 0 and 1 at the same time, unlike a normal bit, which can only be either 0 or 1. This state is called superposition (Figure 5).

- Figure 5. Illustration of bits and qubits

- While the state of bits used in normal computers can be either 0 or 1 only, qubits represent a superposition of 0 and 1 states for calculating.

Superposition Reduces Calculation Iterations

When in superposition, a qubit is in both the 0 and 1 states at once, but the superposition collapses randomly to either 0 or 1 when it is observed. Effective use of this property can reduce the numerous calculations required on a normal computer to far fewer, ideally to just one, to find a solution.

For example, on a normal computer, 10-bit data can be represented as 1,024 different patterns (2 to the power of 10). However, only one of those 1,024 patterns can be represented at any one time. To represent all patterns, the data must be changed 1,024 times. When some condition is attached to the data, each of the 1,024 patterns must be calculated in turn before the optimal pattern can be selected.
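The following Python sketch shows what this looks like on a normal computer. It steps through all 10-bit patterns one at a time and keeps the best one. The condition used here (weights attached to each bit and a target total) is purely hypothetical and is not taken from any real application.

```python
from itertools import product

# Hypothetical condition: each bit switches on an item with the weight below,
# and the best pattern is the one whose total weight is closest to TARGET.
WEIGHTS = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
TARGET = 27


def score(pattern) -> int:
    total = sum(weight for weight, bit in zip(WEIGHTS, pattern) if bit)
    return -abs(total - TARGET)  # a higher score means closer to the target


def best_pattern(bits: int, score_fn):
    """Check every pattern in turn and count how many had to be examined."""
    checked = 0
    best, best_score = None, None
    for pattern in product((0, 1), repeat=bits):
        checked += 1
        current = score_fn(pattern)
        if best_score is None or current > best_score:
            best, best_score = pattern, current
    return best, checked


pattern, checked = best_pattern(10, score)
print(checked)  # 1024
print(pattern)
```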

However, all 1,024 possible patterns can be represented simultaneously by using 10 qubits in a superposition state of 0 and 1. In principle, a single calculation can then act on all of those patterns at once, and a well-designed quantum algorithm uses this to pick out the optimal pattern for some condition. Quantum computers are therefore expected to be good at problems where an optimal solution must be selected from many possible candidates, which current computers find difficult.
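A rough feel for this can be had by imitating 10 qubits on a normal computer. The Python sketch below is a toy simulation, not how real quantum hardware works internally: it gives every one of the 1,024 patterns the same weight (an equal superposition) and then “observes” the qubits, at which point only one randomly chosen pattern comes out. The function names are assumptions for illustration.

```python
import math
import random


def uniform_superposition(qubits: int) -> list[float]:
    """One amplitude per pattern, all equal, with probabilities summing to 1."""
    size = 2 ** qubits
    return [1 / math.sqrt(size)] * size


def measure(amplitudes: list[float], rng: random.Random) -> str:
    """Observation picks a single pattern with probability |amplitude| squared."""
    probabilities = [abs(a) ** 2 for a in amplitudes]
    index = rng.choices(range(len(amplitudes)), weights=probabilities, k=1)[0]
    qubits = (len(amplitudes) - 1).bit_length()
    return format(index, f"0{qubits}b")


state = uniform_superposition(10)
print(len(state))                     # 1024
print(measure(state, random.Random()))  # one random 10-bit pattern
```

Because observation returns only one pattern, a quantum algorithm has to adjust the weights before measuring so that the desired pattern becomes the likely outcome. That step is not modeled here.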

Quantum computers perform calculations using qubits, which can represent 0 and 1 states simultaneously. Qubits seem quite mysterious to the normal way of thinking. However, in the world of quantum mechanics, which is a theory that explains the behavior of extremely small particles like electrons and atoms, qubits are neither mysterious nor remarkable. So, an explanation of quantum mechanics is important.

Quantum Mechanics Deals with Events in the Micro World

Along with the theory of general relativity, quantum mechanics is one of the two pillars supporting modern physics. Whereas the theory of general relativity is used when describing extremely large things like the universe, quantum mechanics is used when dealing with events occurring in an extremely small space such as the inside of an atom. Another contrast is that quantum mechanics was developed by several physicists, while the theory of general relativity was developed by a single person, Albert Einstein.

Quantum mechanics was first introduced by German physicist Max Planck when he presented his quantum hypothesis in 1900. The steel industry was flourishing in Europe during the latter half of the 19th century, with many physicists working on developing techniques for accurately measuring the temperature of molten iron in blast furnaces. While these physicists were able to describe short wavelength light, they were unable to demonstrate a theory that accorded with the results of experiments when using long wavelength light.

Wave Properties and Particle Properties

At the time, there were many natural phenomena that could be explained by assuming light to be a wave. Therefore, there was a strong belief that light energy was continuously changing. Planck reexamined this idea and proposed a quantum hypothesis wherein light energy consists of the smallest possible discrete bundles, and the energy changes with each bundle. Incidentally, the word “quantum” means the smallest possible discrete unit of energy. The quantum hypothesis proposal showed the possibility that light, which had previously been considered to have the properties of waves, could actually have the properties of particles.
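Planck’s idea can be put into numbers with the standard relation E = hc/λ for the energy of one bundle of light, where h is the Planck constant, c is the speed of light, and λ is the wavelength. This formula is not given in the article itself, so the Python sketch below is offered only as an illustration, with hypothetical function names.

```python
PLANCK_CONSTANT = 6.62607015e-34  # joule-seconds
SPEED_OF_LIGHT = 299_792_458      # meters per second


def photon_energy(wavelength_m: float) -> float:
    """Energy in joules of one bundle (quantum) of light at this wavelength."""
    return PLANCK_CONSTANT * SPEED_OF_LIGHT / wavelength_m


def light_energy(wavelength_m: float, bundles: int) -> float:
    """Total energy can only be a whole number of bundles, never a fraction."""
    if bundles < 0:
        raise ValueError("the number of bundles cannot be negative")
    return bundles * photon_energy(wavelength_m)


green = 550e-9  # green light, about 550 nanometers
print(photon_energy(green))    # roughly 3.6e-19 joules
print(light_energy(green, 3))  # exactly three bundles' worth
```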

Then, in 1905, Einstein introduced his photon hypothesis to explain the photoelectric effect, in which electrons are emitted from metal when light is shone on it. Incorporating Planck’s quantum hypothesis, he considered light to be a collection of particles with energy. For a long time, there was great controversy over whether light was a wave or a particle, but it has now been concluded that light has properties of both waves and particles. Anything that, like light, behaves as both a wave and a particle has come to be called a “quantum.”

It is strange that something could have the properties of both waves and particles. This means for example that even a single photon, a single particle of light, will spread out like a wave.

This property is not exclusive to light. In 1924, French physicist Louis de Broglie proposed his hypothesis of matter waves, in which electrons, which were previously considered to be particles, also have the properties of waves. The internal structure of atoms has been known from the beginning of the 20th century, with the understanding that each atom consists of one or more electrons flying around its nucleus. These electrons were thought to follow set orbits within the atom, but no one was able to sufficiently explain why. By incorporating de Broglie’s matter wave hypothesis, however, physicists were able to explain why those electrons exist only within specific orbits.

The matter wave concept was later described in an equation developed by Austrian physicist Erwin Schrödinger. Several experiments were then conducted that confirmed the wave behavior of electrons, which led to the understanding that all matter consists of quanta that have the properties of both waves and particles. As research into quantum mechanics progressed, it became clear that small particles like electrons and atoms behave in ways that humans could not possibly have imagined.

Quantum World Is a World of Probability

To start with, it is only possible to represent events occurring in the quantum world in terms of probability. For example, while the electrons surrounding the nucleus can be considered to exist as waves, when they are observed the wave state collapses and they appear as particles at certain locations. In any single observation, the location of an electron appears to be random, but with repeated observations, electrons are found most often at locations where their probability of existence is high. In other words, until it is observed, an electron exists in many possible locations at the same time. This is the superposition state. At the point of observation, however, the state collapses randomly to a single location. Many physicists, including Einstein and Schrödinger, who had led the quantum mechanics debate until then, resisted this approach based on probability.

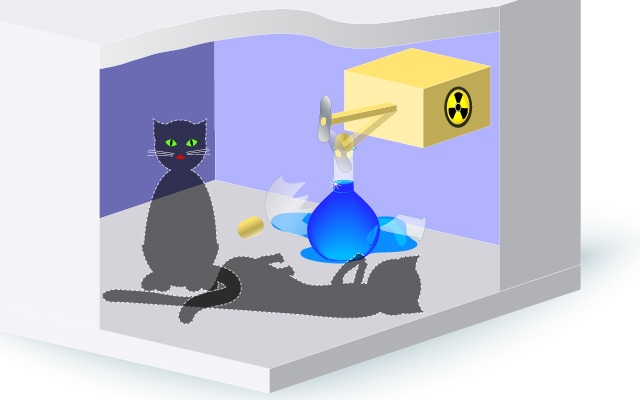

Schrödinger’s Cat

Einstein said the famous words “God does not play dice” to criticize this probabilistic view. As his own criticism, Schrödinger devised a famous thought experiment that later became known as “Schrödinger’s cat.” The thought experiment went like this. A cat, a radioactive element with a half-life of one hour, and a radiation sensor are placed inside a box. If the radiation sensor detects radiation, a poison gas is released (Figure 6).

After an hour, there is a 50% probability that the radioactive element has decayed. Until the point of observation, however, the radioactive element is in a state of superposition where it has both decayed and not decayed. This means that the cat is also in a superposition state where it is both dead and alive. If the radioactive element has decayed, the poison gas has been released and the cat has died. But if the radioactive element has not decayed, the cat is still alive.
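The 50% figure follows directly from the meaning of a half-life: after each half-life, the chance that the element is still undecayed halves. The Python sketch below works this out for the one-hour half-life in the thought experiment and imitates opening the box with a random draw. The function names are hypothetical, and the random draw is only a stand-in for observation.

```python
import random


def decay_probability(elapsed_hours: float, half_life_hours: float = 1.0) -> float:
    """Chance that the element has decayed after the given time."""
    return 1.0 - 0.5 ** (elapsed_hours / half_life_hours)


def open_box(elapsed_hours: float, rng: random.Random) -> str:
    """Observation settles the outcome: the cat is found either dead or alive."""
    return "dead" if rng.random() < decay_probability(elapsed_hours) else "alive"


print(decay_probability(1.0))  # 0.5
print(decay_probability(2.0))  # 0.75
print(open_box(1.0, random.Random()))
```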

Schrödinger stressed that this quantum superposition state was not possible because if the quantum superposition is accepted, then the superposition state of the cat being both alive and dead must also be accepted. However, when further research showed that quantum superposition was in fact possible, the meaning of Schrödinger’s cat was changed to help explain what observation is and where the lines are between the world of quantum mechanics and the ordinary world of Newtonian mechanics.

- Figure 6. Illustration of Schrödinger’s cat

- Schrödinger’s cat is a thought experiment originally proposed by Schrödinger to criticize the quantum theory of probability. However, it is often used these days as an explanatory example of superposition.

Quantum Computers Being Developed

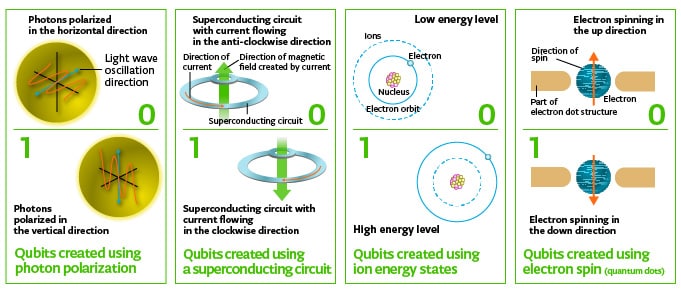

Quantum computers are based on the theory of computation proposed in 1985 by British physicist David Deutsch. A quantum computer is a machine that calculates by using numerous connected qubits that deal with quantum information. Several methods for creating qubits have been proposed.

Of them, the method that has progressed furthest is one that uses superconducting circuits (Figure 7). Some metals and other substances become superconducting, showing zero electrical resistance, when cooled to close to absolute zero (minus 273.15°C). A state of superposition can then be created in a special circuit held in this superconducting state, with the current flowing through the circuit representing 0 and 1.

Despite the drawbacks of the superconducting circuit, in particular that the superposition state can only be maintained for a short period of time and errors occur easily with many qubits coupled together, research is continuing into the design of circuits to create qubits that are easier to handle. Incidentally, the IBM Quantum System One mentioned at the start of this column is also a superconducting quantum computer. IBM has also launched its IBM Q Experience service enabling the quantum computer to be operated via the internet.

Other research and development into qubit-creation methods include an ion method that traps ions inside a vacuum chamber, an optical method that uses polarization of photons, an electron method that traps electrons inside a semiconductor chip, and another electron method that floats electrons above liquid helium in a vacuum.

- Figure 7. Various methods of qubit creation being researched

- Qubits in the superconducting method are created in special circuits cooled to ultra-low temperatures to reach a superconducting state of zero electrical resistance. The direction of current flowing in the circuit, among other methods, is used to represent 0 and 1 and to create a state of superposition.

Challenges and Outlook for Quantum Computer Development

Although usable quantum computers already exist, the problem is that they have too few qubits. Qubits are fragile and delicate, so increasing their number and the scale of the machine is quite difficult. Furthermore, mechanisms for correcting errors must be incorporated before accurate calculations are possible. With so few qubits available, development has not yet reached the point of calculating with error correction mechanisms. The field is currently still at the basic research stage, with practical application a long way off (Figure 8).

- Figure 8. Weaknesses of quantum computers

- Quantum computers calculate using qubits in the state of superposition, with mistakes occurring even with only a little noise or error. To achieve a practical application, manipulation of electrons and photons needs to be extremely accurate to enable accurate calculations, and there needs to be some mechanism for correction.

In October 2019, Google’s research team announced that it used a 53-qubit quantum computer to solve a problem in 200 seconds that would have taken 10,000 years to solve using today’s most advanced supercomputer. The comparison was made using a problem that can be easily solved using a quantum computer, so this does not mean that quantum computers will suddenly make our lives easier.

This announcement showed that quantum computers can be used to quickly solve problems that supercomputers find difficult and that take a lot of time. As quantum computer research progresses, it is possible that quantum computers will be developed with more and more qubits fabricated on a single chip, just like computers in the past that used increasingly more and more transistors. If quantum computers equipped with billions of qubits are developed, they will make dramatic changes to our society. By that time, it is likely that there will also be many software programs developed to operate those quantum computers. Just maybe, the time will come when people walk the streets carrying a quantum computer as they do now with regular computers and smartphones.

[References]

(publication titles translated from the Japanese)

- Understanding Quantum Computers, Shuntaro Takeda

- What Is a Quantum?, So Matsuura

- Future Changed by Quantum Computers, Masayoshi Terabe and Masayuki Ohzeki

- Quantum Computers Accelerating Artificial Intelligence, Hidetoshi Nishimori and Masayuki Ohzeki

- Quantum Theory for Beginners, Sten Odenwald

- Newton, May 2018 Issue

- Newton, July 2021 Issue


- Writer

Yoshitaka Arafune

Science Writer

Yoshitaka Arafune started working as a science writer while studying at Tokyo University of Science. He has covered a wide range of fields, from cosmology to scientific phenomena experienced in daily life, collecting information, and writing articles. He hopes to convey the fun of science, with its new discoveries every day, to as many people as possible. His main publications include Understanding the Universe through Five Mysteries (published by Heibonsha) and Mysterious Facts about the Earth That You Will Want to Tell Everyone (published by Nagaoka Shoten).
