- Semiconductor Technology Now

In a collaborative study between the Ministry of Defense and the National Defense Medical College, ST voice analysis was used along with blood tests to diagnose depression among Self-Defense Forces personnel dispatched on a disaster relief mission after the Great East Japan Earthquake in 2011. The study reports that the results obtained by the two methods were similar.

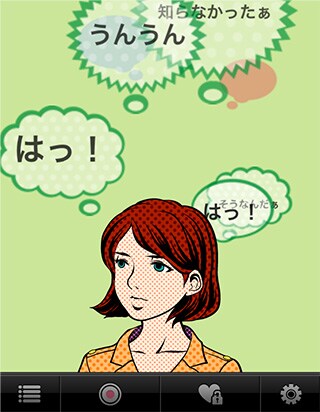

AGI is currently developing a system that combines conventional voice recognition with ST. “With conventional systems, recognition errors will only increase as the dictionary becomes larger, so compiling a huge and extensive dictionary won’t help,” says Mitsuyoshi. “Also, young people like to invent new words, which won’t be recognized by a dictionary-based system. With ST, it might become possible for the system to guess the approximate meaning of unknown words from the speaker’s expression, and keep learning new words on its own.”

Mitsuyoshi also plans to develop, within three years, a quasi-self-aware system that recognizes users’ emotions and responds interactively. “We are becoming increasingly distrustful of others,” he continues. “Associating with a machine that can appreciate how you feel may perhaps help us get in touch with our humanity again.”

Becoming more than just a user interface

Voice recognition researchers have long dreamed of turning the technology into artificial intelligence (AI), but the fact remains that voice recognition is still an auxiliary user interface at most. The recognition rate is already good enough for practical use in selected fields, but adoption has been slow. Perhaps one reason voice recognition hasn’t caught on is the lack of killer apps.

That may change with the rising popularity of Siri on the iPhone. It may be the result of the manufacturer’s image-boosting strategy, but its effects could be far-reaching. Previously, voice recognition was seen as just one of several user interfaces (an alternative to the mouse or keyboard) for using existing software and services. Such a perception was self-limiting, and therein lay the problem.

Although the basic principles of voice recognition, including hidden Markov models (HMMs), haven’t changed much for decades, it has become possible to collect huge amounts of data over the network, which has significantly improved the recognition rate.

Smartphones also let us capture a wide range of data besides voice signals. Examples include location and motion data from GPS and acceleration sensors, additional instructions issued with a touch on the screen, and ambient photos and videos. Together, these data draw a detailed picture of the user, which was not possible when the voice signal was the only data a phone handled. Other wearable devices such as the Nike+ FuelBand will also provide data about the user’s daily patterns.

Similarly, voice recognition is not just a user interface but can also be a channel for collecting data on users. Combined with various sensors and emotion recognition technology, a voice recognition system will be able to give more pertinent, contextual responses to users’ queries.

Voice recognition has had only limited use as a standalone technology. Now that it is being integrated into devices that users carry all the time, however, it has a real shot at evolving into an agent powered by AI.

Writer

Tatsuya Yamaji

Born in 1970, Yamaji worked as a magazine editor before going freelance as a writer and editor. His areas of expertise include IT, science, and the environment. His books in Japanese include The Advent of the Inkjet Age (with a coauthor), Japanese Eco Technology Changes the World, and Dangen (with a coauthor).

Twitter account: @Tats_y